How to Best Describe Your GenAI Materials in Documentary Proposals: Four Approaches, From a Grant Reviewer

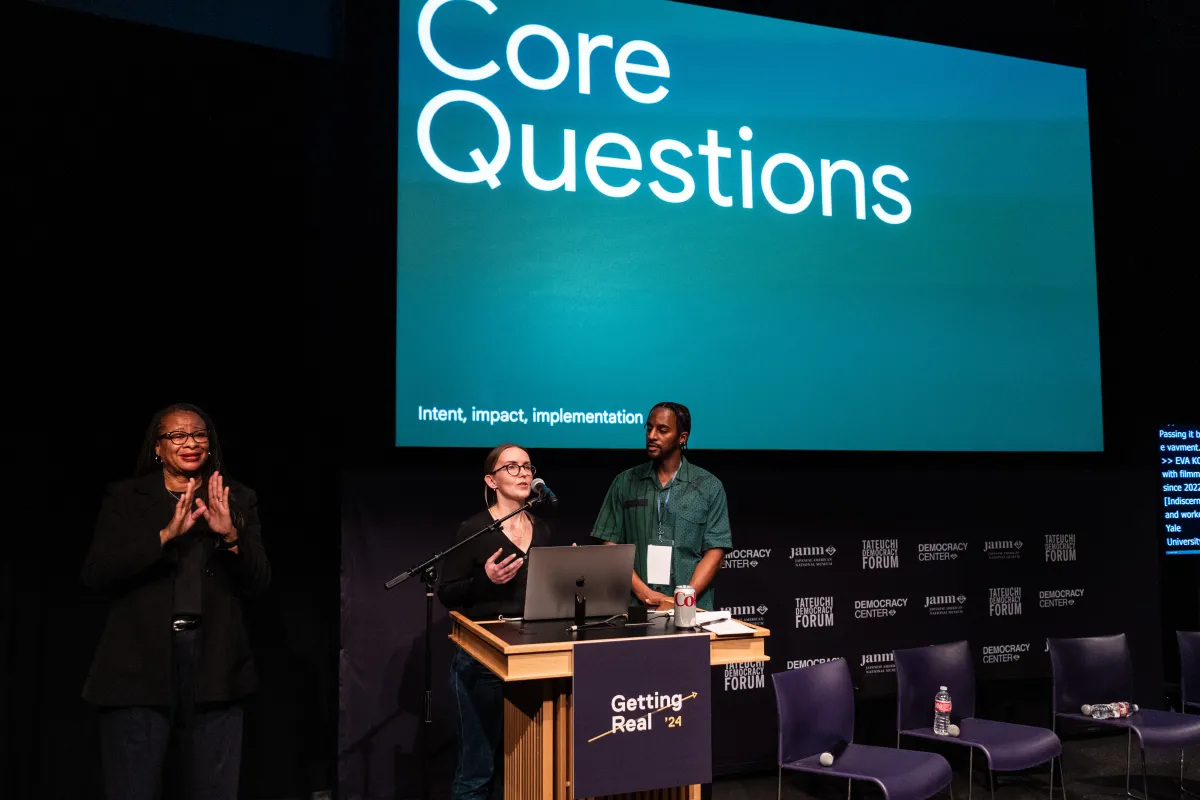

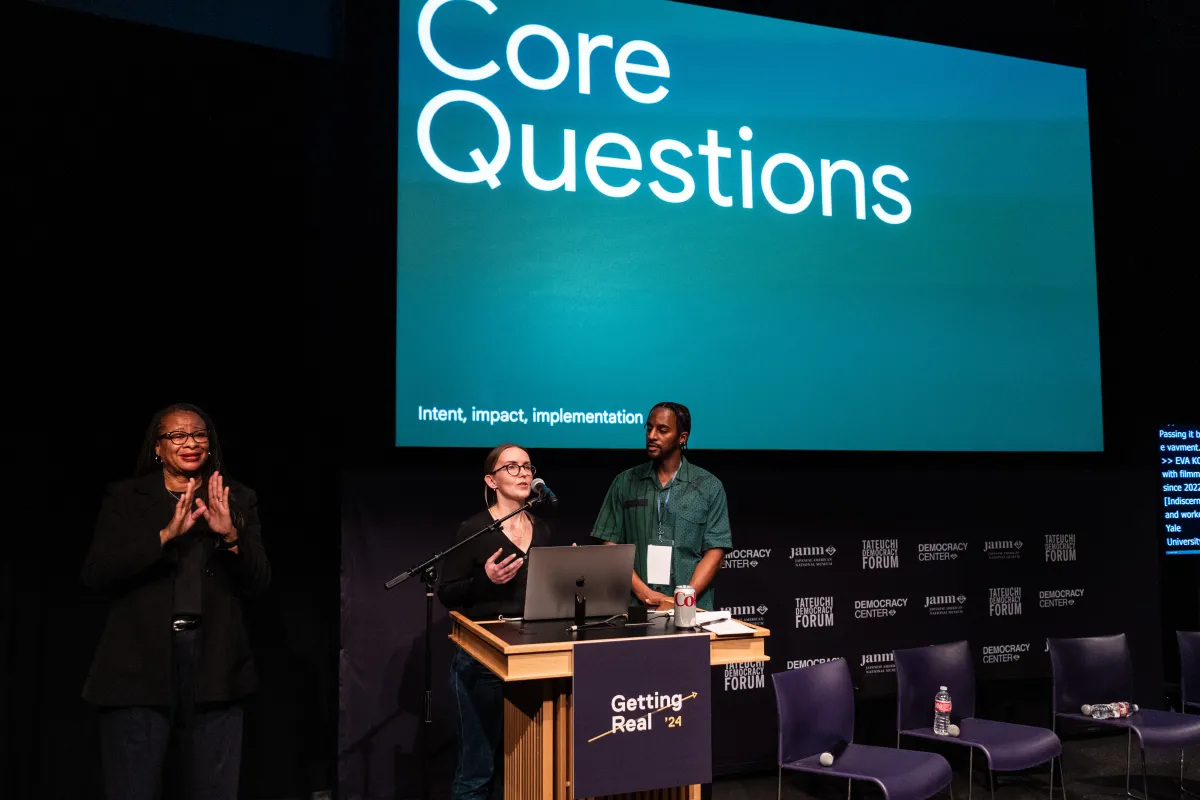

Eva Kozanecka and Will Tyner deliver their workshop on artificial intelligence at Getting Real ’24. Image credit: Urbanite LA

As a reviewer for a couple of documentary grants, I find the process a lovely way to learn what stories my peers are exploring and which styles and forms are standing out from the pack. It is also a way to make some extra cash to subsidize the financially marginal existence that many of us filmmakers lead.

Reviewing grant applications, like everything else, has been complicated by generative artificial intelligence (GenAI, for short). After six years of reviewing projects, this is the first year I am regularly encountering audiovisual GenAI in work samples. Notably, most of these projects are not about AI.

How do we fairly assess samples and proposals that leverage artificially generated audiovisual material? And how can filmmakers put their best foot forward when engaging with GenAI materials? In this article, I will examine what various funds say are best practices for filmmakers, categorize the current use cases, and discuss how they can be improved to convey transparency and creative intention.

Funder Approaches and Industry Guidelines

I reached out to eight fund managers to see where the field stands on using audiovisual GenAI. (This piece is not focused on text GenAI in the written portions of the applications.) Because funders' review processes differ, some need to tailor their approach to GenAI less than others.

Some funds can address GenAI questions on a case-by-case basis. For example, Perspective Fund’s process involves an initial open Letter-of-Intent (LOI) phase; only after the team feels prepared to engage a project will they request specific materials and engage in deeper conversation.

This level of attention is not possible for open call application funds that involve sample review as part of the first phase of screening. These funders are in various phases of determining whether and how they would adopt a GenAI policy.

In June, the Nonfiction Core App released an addendum to address GenAI. This addendum was developed by IDA, the Archival Producers Alliance (APA), Google's Artists + Machine Intelligence (AMI) team, and the Sundance Institute Artist Accelerator Program. Rather than adding new questions to the Core App, the update folds GenAI-related prompts into existing questions where relevant. For example, the "Artistic Approach" section now notes that applicants should disclose whether they are using audiovisual GenAI and, if so, describe how they are using it. Film teams who are not using GenAI (and both the related survey results and my own observation show that most currently are not) can simply skip these prompts and fill out the application as they would have before.

The addendum does not scrutinize the use of GenAI in the writing of the application. In a written statement, the working group notes, “It has long been common practice for filmmakers to hire external grant writers to help write their applications; or to use computer software to refine their grammar or legibility—especially if English is not their first language.”

InDocs’ Docs By the Sea has the most defined approach to addressing GenAI in their applications. They already have a GenAI policy incorporated into their Submission Guidelines. This outlines mandatory disclosure requirements, as well as permitted and prohibited applications. They detail how the inclusion of GenAI impacts their evaluation framework, how they intend to implement these guidelines, and the potential consequences of violating these guidelines.

Other funders that produce films for public broadcast directed me to standards established by these outlets, such as the PBS standards for GenAI or BBC standards for AI Transparency. That is, filmmakers intending to pursue broadcast and distribution options with any journalistic outlets should comply with their standards and practices. Still other funds refer to the APA’s GenAI best practices guidelines, though this set of guidelines primarily concerns the use of GenAI output that is explicitly meant to be in the final cut of the project.

In trying to craft guardrails for GenAI in documentary, funders are balancing the needs of artists pushing boundaries in a difficult and laborious funding landscape with the protection of documentary’s essential values as a form. Addressing the need for audience disclosure, Chicken & Egg Films Senior Program Manager Elaisha Stokes writes, “I think we cross a line when we can no longer discern if the image we are looking at is a ‘true’ image.”

The Four Current Types of Audiovisual GenAI Materials

For funds that use initial screeners for review, the screeners are generally expected to spend 30–60 minutes on an application, factoring in the written application, the work samples, and any prior work samples. Varying GenAI implementations can raise new questions, leaving a screener to work out how to engage with the audiovisual material in the first place. Ultimately, I find that projects using audiovisual GenAI fall into four essential categories, and each comes with different considerations.

1. GenAI use in films about AI

This is the only category where we have extensive precedent. By now, many projects about GenAI that also use GenAI have been completed, ranging from About A Hero (2024) to an AI-focused episode of What's Next? With Bill Gates (2024). AI is front and center in these projects, so their applications inherently have to communicate openly how and why they are using GenAI. For that reason, I consider them the least concerning as far as the screening process goes: applicants can simply refer to the APA's GenAI guidelines to make sure their usage of GenAI is in compliance.

2. GenAI use in films about other subjects

As with the previous category, the APA Guidelines are very useful here, since they concern the final usage of the output materials. The essential difference is in justifying purpose, since the purpose is not self-evident from the subject matter itself. Reasonable justifications may include:

- Voicing written materials.

- Voicing a deceased person.

- Expanding an existing image with generative fill.

- Creating sequences that otherwise would require the employment of VFX, GFX, or animation artists.

When reviewing these usages, I try to follow the APA Guidelines in considering the ethics of personhood and factuality. Is the person whose voice is used to generate the audio able to consent? Is the estate of a deceased person able to consent to the recreation of their voice? Is generative fill creating historical inaccuracies or altering the context of the original image?

Where I think there is space for further interrogation is the final example: Are we using GenAI to avoid employing fellow artists? Aside from the audio cleanup tools that come with editing programs, there are uses of GenAI that can complement VFX, GFX, and finishing work; many such programs have been in use at VFX and post houses for years now. These tend to be proprietary, however, meaning the models are trained on a studio's existing process and pipeline in order to maintain quality standards. Cost-cutting is tempting on constrained documentary budgets, but cutting costs without consideration may validate the notion that creative work is replaceable. Reviewers may also scrutinize this kind of cost-cutting in later stages of review.

3. GenAI use as placeholder

Luke Moody, head of the BFI Doc Society Fund, notes that GenAI could help solve a fundamental contradiction in documentary funding: early-stage projects often lack resources to create the polished materials that funders expect to see. “GenAI may support economic accessibility of this systematic expectation, and accessibility more broadly,” he observes.

This type of prototyped usage can offer reviewers a taste of what filmmakers hope to create once they are funded to:

- Conduct shoots.

- Employ animators, VFX, and GFX artists.

- R&D or production travel.

- Finalize and re-record VO.

It’s understandable why filmmakers may want to use GenAI in early project samples, even without intending to use it in the finished project. Still, it’s important to have transparency around which shots are GenAI and what decisions the filmmakers made in order to generate them. Just as with any other creative decision, it’s helpful to understand the thought process around the GenAI usage from a creative angle. Why did you use this specific GenAI program? Why are these specific shots GenAI?

Docs By the Sea's GenAI policy was the only one I reviewed that explicitly addresses placeholder usage. It permits GenAI materials as placeholders, but only if they're clearly labeled as such. Applicants must also disclose other information, such as the GenAI program used, the purpose, and the intended integration.

From a screener’s standpoint, one issue with GenAI placeholder materials is that these often look the same. “Photoreal” GenAI isn’t yet at a level where it looks fully real—there is a sort of video-game movement and texture to much of it that is pretty easy to identify, and these shots often default to similar slow tracking camera movements. After reviewing applications that likely all used Runway for visual materials, these shots felt more connected to each other than to their respective projects.

One solution is using GenAI that’s trained on specific source materials—for example, the director’s prior work—which maintains aesthetic consistency. However, this approach isn’t necessarily accessible, as it’s more expensive than using ready-made energy-intensive options that mine the internet for audiovisual material. In addition, this approach may not be viable if the filmmakers are making a new type of film for the first time, want to evolve their artistic approach from prior work, or don’t have enough prior work for training.

As a reviewer, I have other questions, especially if I don’t know the exact prompts used to generate the material. Did the filmmakers prompt the lighting, camera angle, lens, compositional balance? Are there cuts that were generated by the GenAI program or was it cut together by the editor? Those answers affect my considerations of purpose and the project’s intended artistic style.

4. GenAI with an unclear role

I've reviewed multiple projects in which GenAI is not mentioned in the written application despite appearing in the sample. Both the APA guidelines and the Docs By the Sea requirements state that this is unacceptable. While image-based GenAI is currently detectable, this will change as programs grow more sophisticated. GenAI audio and text outputs are already much harder to detect.

Reviewers depend upon filmmaker transparency to understand not only what materials comprise the sample, but also:

- What artistic decisions are being made?

- What will the final film look like?

- What kind of work has already been done?

- What sorts of resources do they intend to draw from?

Intentional ambiguity between reality and nonreality can be compelling for an audience. In a funding application, however, it seems needlessly confusing: funders are partners who should understand the experiment, not be subjected to it. Transparency also protects filmmakers from unintended criticism. If reviewers know the GenAI details, they are less likely to hold lighting or camera movement that the filmmakers never intended against the project.

***

Before starting any review, I always think, “I hope this application is clear.” It’s far easier to understand a film team’s intentions when the essence of the project isn’t buried by verbiage and ambiguity. Following standard expectations for GenAI and being transparent about its usage in your projects can reduce those misunderstandings. Filmmakers can enter the application process knowing how to communicate about GenAI in a succinct and effective manner. It reduces guesswork.

As nonfiction filmmaking grows more hybrid and our world grows more artificial (almost certainly not a coincidence), standards help both filmmakers and screeners focus on the promise of proposed projects.

Daniel Larios is a filmmaker, writer, and program manager based in Los Angeles. He also reviews applications for funders such as Catapult and the Sundance Institute Documentary Film Program.